Configuring Microsoft Sentinel archive period for tables at Mass for Data Retention within Log Analytics Workspace

Script and demo to set the archive period for tables within a Microsoft Sentinel Log Analytics workspace!

Last year, I released a post highlighting the importance of, and differences between, the log tiers in Log Analytics workspaces for Microsoft Sentinel. In this post, we'll explore how to bulk configure your archive settings within a Log Analytics workspace (LAW), enabling an easy setup for low-cost archive logs.

Before we begin, thanks for collaborating and credit to:

- Shaun Hardneck (thatlazyadmin on github.com)

A few months ago, Microsoft released this Tech Community Blog Post about the manual way to set “archive period for tables at Mass”. That approach is a little bulky and prone to human error.

So let's see how a script can do this with just four inputs...

# ==============================================================================
# Azure Log Analytics Table Retention Update Script
# Created by: Shaun Hardneck (ThatLazyAdmin)
# Blog: www.thatlazyadmin.com
# ==============================================================================
# This script connects to Azure, allows you to select a subscription and a Log Analytics workspace,
# and sets the retention and archive duration for all tables within the workspace.
#
# Features:
# - Suppresses Azure subscription warnings
# - Silences output after selecting the subscription
# - Exports results to a CSV file
# - Returns to subscription selection after completion
#
# Retention Options:
# 1. Retention: Number of days data is retained for interactive queries.
#    Example: 30 (Interactive queries will be possible for the last 30 days of data)
# 2. Total Retention: Total number of days data is retained (including archived data).
#    Example: 730 (Data will be retained for a total of 2 years, with archived data available after the interactive retention period)
# ==============================================================================

# Import the necessary modules
# Import-Module Az -ErrorAction SilentlyContinue

# Permanent banner
function Show-Banner {
    Clear-Host
    Write-Host "============================================" -ForegroundColor White
    Write-Host " Microsoft Azure Log Analytics Retention" -ForegroundColor DarkYellow
    Write-Host "============================================" -ForegroundColor White
}

# Close out banner
function Show-CloseBanner {
    Write-Host "============================================" -ForegroundColor White
    Write-Host " Script Execution Completed!" -ForegroundColor DarkYellow
    Write-Host "============================================" -ForegroundColor White
}

# Function to authenticate and select a subscription
function Select-Subscription {
    Show-Banner
    Write-Host "Connecting to Azure..." -ForegroundColor DarkGray
    $WarningPreference = 'SilentlyContinue'
    Connect-AzAccount -WarningAction SilentlyContinue 3>&1 | Out-Null
    $subscriptions = Get-AzSubscription
    $counter = 1
    $subscriptions | ForEach-Object { Write-Host "$counter. $($_.Name) ($($_.SubscriptionId))" -ForegroundColor DarkYellow; $counter++ }
    $subscriptionNumber = Read-Host "Enter the number of the subscription to use"
    $selectedSubscription = $subscriptions[$subscriptionNumber - 1]
    Set-AzContext -SubscriptionId $selectedSubscription.SubscriptionId -WarningAction SilentlyContinue 3>&1 | Out-Null
    Write-Host "Selected subscription: $($selectedSubscription.Name)" -ForegroundColor DarkGray
}

# Function to list workspaces and prompt for retention value
function Update-Retention {
    $resourceGroups = Get-AzResourceGroup
    $workspaces = @()
    foreach ($rg in $resourceGroups) {
        $rgWorkspaces = Get-AzOperationalInsightsWorkspace -ResourceGroupName $rg.ResourceGroupName
        $workspaces += $rgWorkspaces
    }

    if ($workspaces.Count -eq 0) {
        Write-Host "No workspaces found in the selected subscription." -ForegroundColor Red
        return
    }

    $counter = 1
    $workspaces | ForEach-Object { Write-Host "$counter. $($_.ResourceGroupName) - $($_.Name)" -ForegroundColor DarkYellow; $counter++ }
    $workspaceNumber = Read-Host "Enter the number of the workspace to use"
    $selectedWorkspace = $workspaces[$workspaceNumber - 1]
    $resourceGroupName = $selectedWorkspace.ResourceGroupName
    $workspaceName = $selectedWorkspace.Name
    Write-Host "Selected workspace: $workspaceName in resource group: $resourceGroupName" -ForegroundColor DarkGray

    Write-Host "`nEnter retention options (in days):" -ForegroundColor Cyan
    Write-Host "1. Retention: Number of days data is retained for interactive queries." -ForegroundColor DarkYellow
    Write-Host " Example: 30" -ForegroundColor White
    Write-Host "2. Total Retention: Total number of days data is retained (including archived data)." -ForegroundColor DarkYellow
    Write-Host " Example: 730 (for 2 years)" -ForegroundColor White
    $retentionInDays = Read-Host "`nEnter the retention in days (e.g., 30)"
    $totalRetentionInDays = Read-Host "Enter the total retention in days (e.g., 730)"

    $tables = Get-AzOperationalInsightsTable -ResourceGroupName $resourceGroupName -WorkspaceName $workspaceName
    $results = @()
    foreach ($table in $tables) {
        Update-AzOperationalInsightsTable -ResourceGroupName $resourceGroupName -WorkspaceName $workspaceName -TableName $table.Name -RetentionInDays $retentionInDays -TotalRetentionInDays $totalRetentionInDays
        Write-Host "Updated table $($table.Name) with retention $retentionInDays days and total retention $totalRetentionInDays days." -ForegroundColor Green
        $results += [PSCustomObject]@{
            TableName            = $table.Name
            RetentionInDays      = $retentionInDays
            TotalRetentionInDays = $totalRetentionInDays
        }
    }

    $csvPath = "$workspaceName-TableRetention.csv"
    $results | Export-Csv -Path $csvPath -NoTypeInformation
    Write-Host "`nResults exported to $csvPath." -ForegroundColor Green
    Start-Sleep -Seconds 2
}

# Main script execution loop
while ($true) {
    Select-Subscription
    Update-Retention
    Write-Host "`nOperation completed." -ForegroundColor Cyan
    Show-CloseBanner
    $choice = Read-Host "`nDo you want to restart the script or end the session? (Enter 'R' to restart or 'E' to end)"
    if ($choice -eq 'E') {
        Write-Host "Ending session..." -ForegroundColor Cyan
        break
    }
}

So let's talk about why you would want to set the archive period within your Microsoft Sentinel Log Analytics workspace. Microsoft Sentinel works on a consumption-based model: you are charged for how much data you ingest. Retaining that data for up to 90 days within a Sentinel-enabled Log Analytics workspace is free. After 90 days, you are charged per GB for the data you keep in interactive retention.

It's important to consider why and how you will use this data past 90 days to avoid unnecessary cost. Do you need it for threat hunting, incident response, or compliance?

Remember that retention can be set on a table-by-table basis. This helps if you have specific use cases for keeping some logs longer, or if you do not wish to archive high-volume, low-fidelity logs such as those from firewalls.
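As a minimal sketch of that table-by-table approach, the snippet below filters out the tables you want to leave alone and applies the settings to the rest, recording whether each update actually succeeded. `Select-ArchiveCandidate`, `Set-ArchiveForTable`, and the excluded table name are hypothetical choices for illustration and are not part of Shaun's script; only `Update-AzOperationalInsightsTable` and its parameters are taken from the script above.

```powershell
# Hypothetical helper (not in the original script): drop the tables you want
# to leave untouched before applying a workspace-wide retention change.
function Select-ArchiveCandidate {
    param(
        [Parameter(Mandatory)] [object[]] $Tables,
        # Illustrative only; list whichever table names you want to skip.
        [string[]] $ExcludeTableName = @()
    )
    # -notin compares strings case-insensitively by default.
    $Tables | Where-Object { $_.Name -notin $ExcludeTableName }
}

# Hypothetical helper: apply the settings to each table and record the outcome,
# so that a failed update is not reported as a success.
function Set-ArchiveForTable {
    param(
        [Parameter(Mandatory)] [string]   $ResourceGroupName,
        [Parameter(Mandatory)] [string]   $WorkspaceName,
        [Parameter(Mandatory)] [object[]] $Tables,
        [Parameter(Mandatory)] [int]      $RetentionInDays,
        [Parameter(Mandatory)] [int]      $TotalRetentionInDays
    )
    foreach ($table in $Tables) {
        $updateParams = @{
            ResourceGroupName    = $ResourceGroupName
            WorkspaceName        = $WorkspaceName
            TableName            = $table.Name
            RetentionInDays      = $RetentionInDays
            TotalRetentionInDays = $TotalRetentionInDays
            ErrorAction          = 'Stop'   # turn failures into catchable errors
        }
        try {
            Update-AzOperationalInsightsTable @updateParams | Out-Null
            $status = 'Updated'
        }
        catch {
            $status = "Failed: $($_.Exception.Message)"
        }
        [PSCustomObject]@{ TableName = $table.Name; Status = $status }
    }
}

# Stand-in objects; real ones would come from Get-AzOperationalInsightsTable.
$sampleTables = @(
    [PSCustomObject]@{ Name = 'SecurityEvent' },
    [PSCustomObject]@{ Name = 'CommonSecurityLog' },
    [PSCustomObject]@{ Name = 'SigninLogs' }
)
$candidates = @(Select-ArchiveCandidate -Tables $sampleTables -ExcludeTableName 'CommonSecurityLog')
$candidates.Name
```

In a live session you would pass the filtered list to `Set-ArchiveForTable` along with your resource group, workspace name, and the two retention values, then export the returned objects to CSV as the original script does.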

Example

Remember, the first 90 days is free! The total retention time includes the interactive time. For example, if you want a year of total retention, input 365 days.

This gives 90 days of interactive retention, which leaves you with 275 days of archive.
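The arithmetic above can be sketched as a small helper that also rejects inconsistent input before anything is sent to Azure. `Get-ArchiveDays` is a hypothetical function name, and the two checks are assumptions about sensible input rather than a statement of the exact limits the service enforces.

```powershell
# Hypothetical helper: archive days are whatever remains of total retention
# once the interactive retention period is subtracted.
function Get-ArchiveDays {
    param(
        [Parameter(Mandatory)] [int] $RetentionInDays,
        [Parameter(Mandatory)] [int] $TotalRetentionInDays
    )
    if ($RetentionInDays -lt 1) {
        throw "Retention must be a positive number of days."
    }
    if ($TotalRetentionInDays -lt $RetentionInDays) {
        throw "Total retention ($TotalRetentionInDays) cannot be shorter than interactive retention ($RetentionInDays)."
    }
    $TotalRetentionInDays - $RetentionInDays
}

Get-ArchiveDays -RetentionInDays 90 -TotalRetentionInDays 365   # 275
Get-ArchiveDays -RetentionInDays 30 -TotalRetentionInDays 730   # 700
```

Because the parameters are typed as `[int]`, the strings returned by `Read-Host` are converted automatically, and non-numeric input fails at the call instead of reaching the update cmdlet.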

Why consider setting Mass Archive?

Now let's take a moment to circle back to why we need to consider the ingestion tiers and options available. In Microsoft Sentinel, logs are categorized into three main types: Interactive, Basic, and Archive.

Each type has different characteristics and uses, primarily around retention, cost, and query performance.

Interactive

Interactive logs are designed for high-performance queries and real-time analysis. They typically have shorter retention periods, ranging from 30 to 90 days. Due to the need for faster access and more frequent querying, they incur higher costs. These logs are ideal for active threat hunting, incident investigation, and real-time monitoring where up-to-date information is crucial.

Basic

Basic logs offer a balance between cost and performance, making them suitable for less frequent querying. Their interactive retention is fixed at eight days; any longer retention is set via the table's archive settings. The cost is lower than that of interactive logs due to less frequent access and slower query performance. Basic logs are suitable for less critical data that doesn't require real-time analysis, such as routine operational monitoring and historical trend analysis. When you change an existing table's plan to Basic logs, Azure archives data that's more than eight days old but still within the table's original retention period.

Archive

Archive logs provide long-term storage of logs with infrequent access requirements. They are designed for much longer retention periods, often spanning several years. The cost is the lowest among the three types due to infrequent access and slower query performance. Archive logs are ideal for compliance, regulatory requirements, and historical data retention where the data is sometimes queried but needs to be retained for extended periods.

Understanding these log types helps in optimizing both performance and cost, depending on the specific needs of your security and operational monitoring requirements.
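To see where each table currently sits before changing anything, a short summary of plan, interactive days, and archive days can help. The sketch below is a hypothetical helper (`Get-TableRetentionSummary`) that assumes the table objects expose `Name`, `Plan`, `RetentionInDays`, and `TotalRetentionInDays` properties, as the objects returned by `Get-AzOperationalInsightsTable` are expected to. The sample values, including the custom table name, are made up for illustration.

```powershell
# Hypothetical helper: summarise per-table retention from objects shaped like
# the output of Get-AzOperationalInsightsTable (property names are assumed).
function Get-TableRetentionSummary {
    param([Parameter(Mandatory)] [object[]] $Tables)
    foreach ($table in $Tables) {
        [PSCustomObject]@{
            TableName       = $table.Name
            Plan            = $table.Plan
            InteractiveDays = [int]$table.RetentionInDays
            # Archive is the part of total retention beyond the interactive period.
            ArchiveDays     = [int]$table.TotalRetentionInDays - [int]$table.RetentionInDays
        }
    }
}

# Stand-in data for illustration; not real workspace output.
$sample = @(
    [PSCustomObject]@{ Name = 'SecurityEvent';    Plan = 'Analytics'; RetentionInDays = 90; TotalRetentionInDays = 365 },
    [PSCustomObject]@{ Name = 'ExampleCustom_CL'; Plan = 'Basic';     RetentionInDays = 8;  TotalRetentionInDays = 30 }
)
$summary = @(Get-TableRetentionSummary -Tables $sample)
$summary | Format-Table -AutoSize
```

Against a real workspace, you would pass the result of `Get-AzOperationalInsightsTable -ResourceGroupName ... -WorkspaceName ...` in place of `$sample`.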

See my previous post on Log Management!

Money Money Money - Understanding Microsoft Sentinel's Log Management

When managing security logs, especially in large-scale IT environments, the cost implications of using Microsoft Sentinel's different log types are crucial to understand. Microsoft Sentinel, a sophisticated SIEM platform, offers various types of logs, each tailored to specific needs and cost structures.

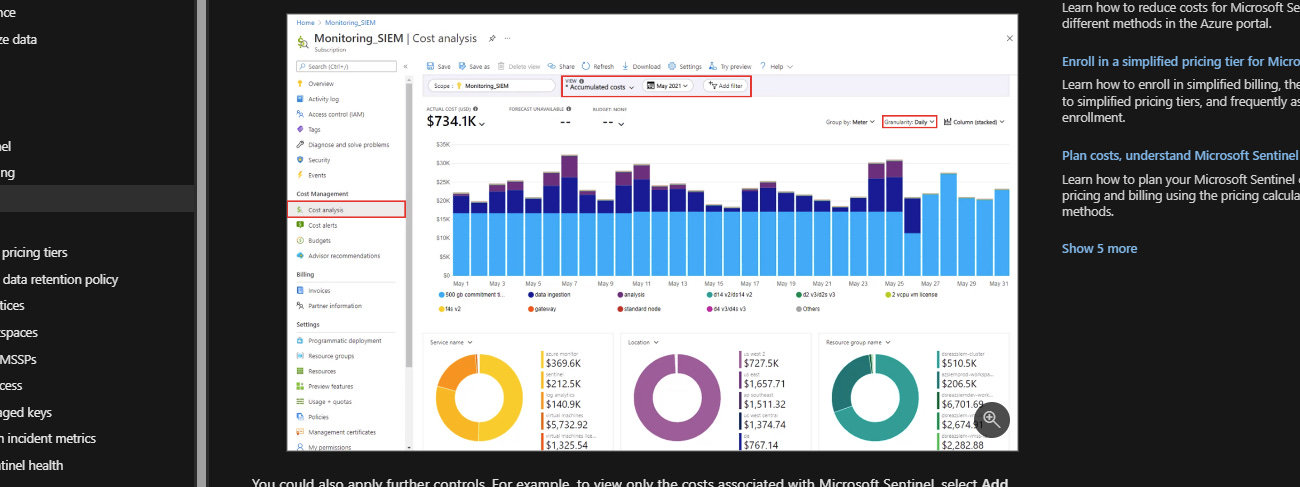

Costs

Basic logs cost about one-fourth of what interactive logs cost. When data is moved to an archive, you need to use search jobs or restore jobs to access it. Search jobs are charged at a much lower rate, and the cost to store the data in an archive is significantly less. You can see a quick comparison on screen and explore specific pricing details by visiting the Microsoft Learn website and searching for log analytics plan costs.

Understanding these costs is crucial for optimization. It's important to stay informed about the pricing model, as I'm sure Microsoft plans to introduce more log and commitment tiers. Keeping an eye on these changes will help you manage costs effectively.

Other Options

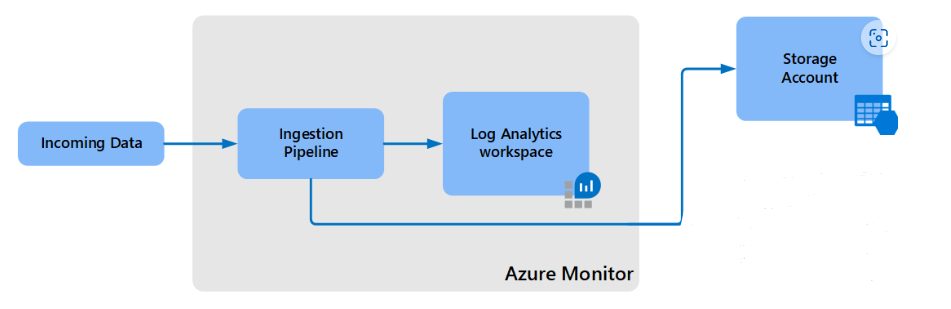

You can also extend the built-in archive log functionality with other options for more complex requirements, such as:

- Azure Data Explorer (ADX): recommended for users who need to frequently query the data.
- Exporting data to an Azure Storage account: recommended for users who rarely need to perform queries on the data or have specific querying needs.
- Storage account export via Logic Apps: recommended for users who rarely need to perform queries on the data and have their storage account set in a different region than their Log Analytics workspace.

A link with more info on each of these options can be found here! Image credits to Inbal Silis and her co-authors!

Thanks for watching and reading! If you enjoyed this video and post, please follow the blog and check out Shaun Hardneck's "That Lazy Admin" on GitHub. See you soon!

#MicrosoftSecurity

#MicrosoftLearn

#CyberSecurity

#MicrosoftSecurityCopilot

#Microsoft

#MSPartnerUK

#msftadvocate