CrowdStrike BSOD - Recovery Options, PC, Server, Hyper-V, AWS, Azure - 19th July 2024

How to recover from the CrowdStrike BSOD in various scenarios: PC, Server, Hyper-V, AWS, Azure (19th July 2024)

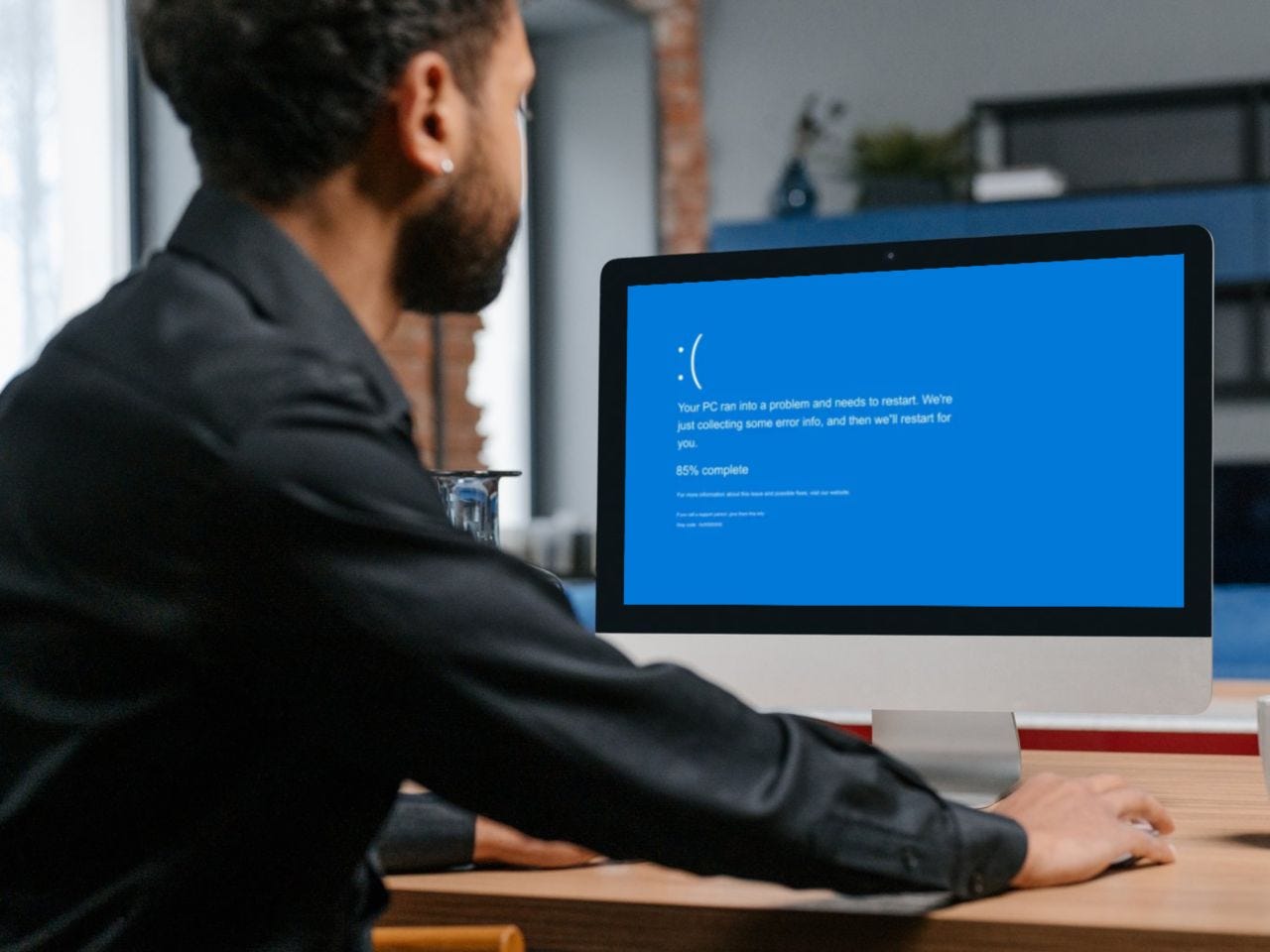

A significant Windows outage has disrupted computer systems across emergency services, banks, airports, and more worldwide. This incident, reportedly linked to a software issue with CrowdStrike Falcon Feed Update, has led to Blue Screen of Death (BSoD) errors. The impact includes grounded flights, interrupted online banking, and halted broadcasting services.

While the manual fixes below are essential, the broader lesson is clear: resilience and preparedness are critical.

I hope a valuable lesson is learnt and that awareness is raised of how much the world depends on technology and cyber security. Hopefully all dependent systems are built to fail safe and, above all else, human life is preserved.

So how can we fix the CrowdStrike BSOD on a PC, a server, Hyper-V, AWS or Azure?

Physical machine

If you have a physical machine:

– After three failed boots, Windows will enter “Automatic Repair” mode. (If the drive is BitLocker-encrypted, you may need its recovery key.)

– In the automatic repair page click “Advanced Options” > “Troubleshoot” > “Advanced Options” > “Command Prompt”

– From this command prompt, change to the OS drive and rename the problematic CrowdStrike channel file:

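```
REM Switch to the Windows volume. In the recovery environment this may not be C:;
REM if the folder below is missing, run diskpart > list volume to find the right letter.
C:
cd C:\Windows\System32\Drivers\CrowdStrike
REM List the problematic channel file(s)
dir C-00000291*.sys
REM Replace <filename> with the name shown by dir, and <filename_old> with a new name
ren <filename> <filename_old>
```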

Locate the file matching “C-00000291*.sys”, and rename it.

Then exit the command prompt and reboot the machine. It should now boot normally.

———————

Posting for CrowdStrike BSOD

Physical server

If you have a physical server whose hard disk you can detach:

– Set up a new Windows machine to use for troubleshooting.

– Detach the hard disk from your broken server and attach it to the new Windows machine you’ve set up.

– Open diskmgmt.msc, find the hard disk, right-click it and bring it online. If the volume is BitLocker-encrypted, you will need a recovery key to access the file system (contact your AD admin).

– Once you can see the file system, go to <drive letter>:\Windows\System32\Drivers\CrowdStrike, locate the file matching “C-00000291*.sys” and rename it.

– Then go back to diskmgmt.msc and take the disk offline before detaching it. Reattach it to the server, and the machine should now boot.
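If the troubleshooting machine happens to have Python 3 installed (an assumption; nothing above requires it), the locate-and-rename step can be scripted. The sketch below checks a list of candidate drive roots for Windows\System32\Drivers\CrowdStrike and appends `.old` to every file matching C-00000291*.sys. The function names and the `.old` suffix are hypothetical choices for illustration, not anything CrowdStrike prescribes.

```python
import string
from pathlib import Path

# Relative location of the CrowdStrike channel files on a Windows volume.
CROWDSTRIKE_SUBDIR = Path("Windows", "System32", "Drivers", "CrowdStrike")
CHANNEL_FILE_PATTERN = "C-00000291*.sys"


def find_crowdstrike_dirs(roots):
    """Return the CrowdStrike driver directory under each root that has one."""
    found = []
    for root in roots:
        candidate = Path(root) / CROWDSTRIKE_SUBDIR
        if candidate.is_dir():
            found.append(candidate)
    return found


def rename_channel_files(crowdstrike_dir, suffix=".old", dry_run=False):
    """Append `suffix` to every C-00000291*.sys file in the directory.

    Returns the new paths (or, with dry_run=True, the paths that would be
    created without touching any file).
    """
    renamed = []
    for channel_file in sorted(Path(crowdstrike_dir).glob(CHANNEL_FILE_PATTERN)):
        target = channel_file.with_name(channel_file.name + suffix)
        if not dry_run:
            channel_file.rename(target)
        renamed.append(target)
    return renamed


if __name__ == "__main__":
    # Hypothetical: scan D: to Z:, skipping the helper machine's own C: drive.
    roots = [f"{letter}:\\" for letter in string.ascii_uppercase[3:]]
    for directory in find_crowdstrike_dirs(roots):
        for new_path in rename_channel_files(directory, dry_run=True):
            print("would rename to", new_path)
```

The script only previews by default; once the printed paths point at the attached disk rather than the helper's own system drive, call `rename_channel_files(directory)` without `dry_run` to apply the change.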

—————


VM on Hyper-V

If you have a virtual machine on Hyper-V:

– Attach a Windows 8/10 installation ISO to the VM. In the VM’s settings, under Hardware > Firmware, change the boot order so the ISO (DVD drive) boots first.

– Reboot the VM and wait until it reaches the “Install” page. Press Shift + F10 to open a command prompt.

– In the command prompt, run:

```
diskpart
list volume
exit
```

– Find the drive letter of your Windows volume (the label should read “Windows”; you can also check the size), then switch to that drive.

In the example below, I’ve assumed the Ltr column showed F for the Windows volume; replace F with your own drive letter.

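```
REM F: is the example drive letter from diskpart; use your own
F:
cd F:\Windows\System32\Drivers\CrowdStrike
dir C-00000291*.sys
REM Replace <filename> with the name shown by dir, and <filename_old> with a new name
ren <filename> <filename_old>
```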

Locate the file matching “C-00000291*.sys”, and rename it.

Then exit the command prompt, detach the ISO and reboot the virtual machine. It should now boot normally.

——————————


VM on AWS

If you have a VM on AWS, you have two options:

Detach the disk from your VM, download it, modify it, upload it back and swap the OS drive to it.

or

Detach the disk from your VM, create a new VM and attach the disk to it as a “data” drive. Modify it, then detach the data drive and attach it back to the original VM.

The “modify it” step is the same either way:

– Open diskmgmt.msc, find the hard disk, right-click it and bring it online. If the volume is BitLocker-encrypted, you will need a recovery key to access the file system (contact your AD admin).

– Once you can see the file system, go to <drive letter>:\Windows\System32\Drivers\CrowdStrike, locate the file matching “C-00000291*.sys” and rename it.

– Then go back to diskmgmt.msc and take the disk offline. Detach the volume, attach it back to the original VM as its root volume and boot it up.
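The second AWS option (rescue instance plus data drive) can also be driven from boto3, the AWS SDK for Python. This is a sketch under stated assumptions, not an official procedure: it assumes boto3 is installed with credentials configured, the instance and volume IDs are hypothetical placeholders, and the device names are defaults you should check against your own instances. It also takes a snapshot first, following the precaution in CrowdStrike's official workaround. The rename itself still happens inside Windows on the rescue instance, so `main()` pauses between the two halves.

```python
def move_volume_to_rescue(ec2, volume_id, broken_instance_id, rescue_instance_id,
                          rescue_device="xvdf"):
    """Stop the broken instance, snapshot its root volume, then attach the
    volume to a running rescue instance as a data disk."""
    ec2.stop_instances(InstanceIds=[broken_instance_id])
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[broken_instance_id])

    # Safety snapshot before any change is made to the volume.
    snapshot = ec2.create_snapshot(
        VolumeId=volume_id, Description="Before CrowdStrike channel file fix")
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[snapshot["SnapshotId"]])

    ec2.detach_volume(VolumeId=volume_id, InstanceId=broken_instance_id)
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])
    ec2.attach_volume(Device=rescue_device, InstanceId=rescue_instance_id,
                      VolumeId=volume_id)
    ec2.get_waiter("volume_in_use").wait(VolumeIds=[volume_id])
    return snapshot["SnapshotId"]


def return_volume(ec2, volume_id, rescue_instance_id, broken_instance_id,
                  root_device="/dev/sda1"):
    """Detach the fixed volume from the rescue instance, reattach it as the
    original instance's root device and start that instance."""
    ec2.detach_volume(VolumeId=volume_id, InstanceId=rescue_instance_id)
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])
    ec2.attach_volume(Device=root_device, InstanceId=broken_instance_id,
                      VolumeId=volume_id)
    ec2.get_waiter("volume_in_use").wait(VolumeIds=[volume_id])
    ec2.start_instances(InstanceIds=[broken_instance_id])


def main():
    # Imported here so the helpers above can be reused without boto3 installed.
    import boto3

    ec2 = boto3.client("ec2")
    # Hypothetical placeholder IDs: replace with your own.
    volume_id, broken_id, rescue_id = "vol-EXAMPLE", "i-BROKEN", "i-RESCUE"
    snapshot_id = move_volume_to_rescue(ec2, volume_id, broken_id, rescue_id)
    print("Safety snapshot:", snapshot_id)
    input("On the rescue instance, rename C-00000291*.sys and take the disk "
          "offline in diskmgmt.msc, then press Enter...")
    return_volume(ec2, volume_id, rescue_id, broken_id)
```

Call `main()` once the IDs are filled in. Windows AMIs commonly use /dev/sda1 as the root device, but confirm the original instance's root device name in the console before relying on the default.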

—————————————


VM on Azure

If you have an Azure VM:

– Create a very basic Windows VM and upload its disk image to Azure, into the same resource group as your broken VM. See:

Use Azure Storage Explorer to manage Azure managed disks - Azure Virtual Machines | Microsoft Learn

– Stop the VM from the portal. Go to Settings > Disks > Swap OS disk. Point it to the disk you just uploaded and boot up the machine.

– Attach your original OS disk as a data disk. Open diskmgmt.msc, find the hard disk, right-click it and bring it online.

– Once you can see the file system, go to <drive letter>:\Windows\System32\Drivers\CrowdStrike, locate the file matching “C-00000291*.sys” and rename it.

– Then go back to diskmgmt.msc and take the disk offline.

– Stop the VM from the portal. Go to Settings > Disks > Detach the data disk. Then click “Swap OS disk”. Point it back to the original OS disk and boot up the machine.
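The two “Swap OS disk” steps above can be sketched with the Azure SDK for Python (the azure-identity and azure-mgmt-compute packages, assumed installed). The sketch deallocates the VM, points its `storage_profile.os_disk` at a different managed disk, updates the VM and starts it again. The subscription, resource group, VM name and disk IDs are hypothetical placeholders, and this is an untested outline rather than Microsoft's documented procedure; attaching and detaching the data disk is left to the portal, as in the steps above.

```python
def swap_os_disk(compute_client, resource_group, vm_name, disk_id, disk_name):
    """Deallocate the VM, point its OS disk at another managed disk, restart it."""
    vms = compute_client.virtual_machines
    # Corresponds to "Stop" in the portal: the VM must be deallocated to swap.
    vms.begin_deallocate(resource_group, vm_name).result()

    vm = vms.get(resource_group, vm_name)
    vm.storage_profile.os_disk.managed_disk.id = disk_id
    vm.storage_profile.os_disk.name = disk_name
    vms.begin_create_or_update(resource_group, vm_name, vm).result()

    # "Boot up the machine" on the newly attached OS disk.
    vms.begin_start(resource_group, vm_name).result()
    return vm


def main():
    # Imported here so swap_os_disk can be reused without the SDK installed.
    from azure.identity import DefaultAzureCredential
    from azure.mgmt.compute import ComputeManagementClient

    # Hypothetical placeholders: replace with your own values.
    client = ComputeManagementClient(DefaultAzureCredential(), "<subscription-id>")
    rg, vm_name = "<resource-group>", "<broken-vm-name>"

    # Boot the broken VM from the basic helper disk you uploaded.
    swap_os_disk(client, rg, vm_name, "<helper-disk-id>", "<helper-disk-name>")
    input("Attach the original OS disk as a data disk, rename C-00000291*.sys, "
          "take it offline and detach it, then press Enter...")
    # Swap the original, now-fixed OS disk back in.
    swap_os_disk(client, rg, vm_name, "<original-disk-id>", "<original-disk-name>")
```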

Further updates to follow. Credit to @r3srch3r on X.

Latest official CrowdStrike tech alert (updated 13:00 on the 19th):

Details

Symptoms include hosts experiencing a bugcheck/blue screen error related to the Falcon Sensor.

Windows hosts which have not been impacted do not require any action as the problematic channel file has been reverted.

Windows hosts which are brought online after 0527 UTC will also not be impacted.

Hosts running Windows 7/2008 R2 are not impacted.

This issue is not impacting Mac- or Linux-based hosts.

Channel file "C-00000291*.sys" with timestamp of 0527 UTC or later is the reverted (good) version.

Channel file "C-00000291*.sys" with timestamp of 0409 UTC is the problematic version.
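The two timestamps above give a quick way to tell which version of the channel file a host has. The sketch below classifies a file's last-modified time in UTC: 04:09 UTC is the problematic version and 05:27 UTC or later is the reverted one. It assumes both times fall on 19 July 2024 (the date of this incident) and that the file's modification time is the timestamp the alert refers to; both are assumptions, and anything that fits neither case is reported as unknown.

```python
from datetime import datetime, timezone
from pathlib import Path

# Times from the CrowdStrike alert; the date (19 July 2024) is an assumption.
BAD_TIMESTAMP = datetime(2024, 7, 19, 4, 9, tzinfo=timezone.utc)
GOOD_FROM = datetime(2024, 7, 19, 5, 27, tzinfo=timezone.utc)


def classify_channel_timestamp(modified):
    """Classify a timezone-aware modification time of a C-00000291*.sys file."""
    modified = modified.astimezone(timezone.utc)
    if modified >= GOOD_FROM:
        return "reverted (good)"
    # The alert gives only hours and minutes, so compare to the minute.
    if modified.replace(second=0, microsecond=0) == BAD_TIMESTAMP:
        return "problematic"
    return "unknown"


def classify_channel_file(path):
    """Read a file's last-modified time (as UTC) and classify it."""
    mtime = datetime.fromtimestamp(Path(path).stat().st_mtime, tz=timezone.utc)
    return classify_channel_timestamp(mtime)
```

Converting every time to UTC first matters: a file stamped 05:09 in a UTC+1 timezone is the 04:09 UTC problematic version.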

Current Action

CrowdStrike Engineering has identified a content deployment related to this issue and reverted those changes.

If hosts are still crashing and unable to stay online to receive the Channel File Changes, the following steps can be used to workaround this issue:

Workaround Steps for individual hosts:

Reboot the host to give it an opportunity to download the reverted channel file. If the host crashes again, then:

Boot Windows into Safe Mode or the Windows Recovery Environment

Note: Putting the host on a wired network (as opposed to WiFi) and using Safe Mode with Networking can help remediation.

Navigate to the %WINDIR%\System32\drivers\CrowdStrike directory

Locate the file matching “C-00000291*.sys”, and delete it.

Boot the host normally.

Note: BitLocker-encrypted hosts may require a recovery key.

Workaround Steps for public cloud or similar environment including virtual:

Option 1:

Detach the operating system disk volume from the impacted virtual server

Create a snapshot or backup of the disk volume before proceeding further as a precaution against unintended changes

Attach/mount the volume to a new virtual server

Navigate to the %WINDIR%\System32\drivers\CrowdStrike directory

Locate the file matching “C-00000291*.sys”, and delete it.

Detach the volume from the new virtual server

Reattach the fixed volume to the impacted virtual server

Option 2:

Roll back to a snapshot before 0409 UTC.
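For Option 2, the main decision is which snapshot to roll back to: the most recent completed one taken before 04:09 UTC (the date, 19 July 2024, is assumed from this incident). The sketch below picks it from snapshot records shaped like the `Snapshots` entries returned by boto3's `describe_snapshots`, each with a `SnapshotId`, a timezone-aware `StartTime` and a `State`. Treating the snapshot start time as the cut-off is an assumption; records from other clouds would need to be mapped to the same keys.

```python
from datetime import datetime, timezone

# Problematic channel file time from the alert (date assumed: 19 July 2024).
CUTOFF = datetime(2024, 7, 19, 4, 9, tzinfo=timezone.utc)


def latest_snapshot_before(snapshots, cutoff=CUTOFF):
    """Return the most recent completed snapshot started before `cutoff`, or None.

    `snapshots` is a list of dicts with "SnapshotId", "StartTime" (timezone-aware
    datetime) and optionally "State", like describe_snapshots()["Snapshots"].
    """
    candidates = [
        s for s in snapshots
        if s["StartTime"] < cutoff and s.get("State", "completed") == "completed"
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s["StartTime"])
```

On AWS, the input list could come from `boto3.client("ec2").describe_snapshots(OwnerIds=["self"], Filters=[{"Name": "volume-id", "Values": [volume_id]}])["Snapshots"]`, where `volume_id` is your affected volume.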

AWS-specific documentation:

Azure environments:

Please see this Microsoft article.